3.1

- uragan1987

2017-12-09T18:07:58:478 [01924] | ERROR | Failed to compile 'C:\Games\steamapps\common\PUBG\TslGame\Binaries\Win64\reshade-shaders\Shaders\SMAA.fx':

C:\Games\steamapps\common\PUBG\TslGame\Binaries\Win64\Shader@0x0000023E75B5C8A0(176,1-86): warning X3557: loop only executes for 0 iteration(s), forcing loop to unroll

C:\Games\steamapps\common\PUBG\TslGame\Binaries\Win64\Shader@0x0000023E75B5C8A0(172,98): error X3508: 'F__SMAASearchDiag1': output parameter 'e' not completely initialized

C:\Games\steamapps\common\PUBG\TslGame\Binaries\Win64\reshade-shaders\Shaders\SMAA.fx(245, 8): error: internal shader compilation failed

Only on Performance Mode, Config Mode works fine.

- JBeckman

Eh, probably not entirely correct. It could be that other setting: there's the first value for search steps, from 1 to 120 (96 in ReShade for older versions of the shader), and then there's another step value that goes from 1 to 20, I think (16 in older versions of said shader). It might be that one and not the third setting, for corner rounding. I wonder why that would break, then.
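The compiler output above fits a loop-count problem: X3557 says a loop runs 0 times, and X3508 says the out parameter `e` of SMAASearchDiag1 is not always written. In the reference SMAA code, `e` is only assigned inside the diagonal search loop, so a diagonal step value that makes the loop bound unreachable leaves `e` without a value. It would also explain why only Performance Mode fails, assuming Performance Mode compiles the settings as constants while Config Mode leaves them as uniforms the compiler cannot evaluate. A minimal C++ sketch of that loop shape (all names hypothetical), with the obvious fix of initializing `e` before the loop:

```cpp
#include <array>
#include <cassert>

// Hypothetical stand-in for HLSL's float2.
using float2 = std::array<float, 2>;

// Hypothetical stand-in for sampling the edges texture: always reports an edge.
inline float2 sample_edges(float /*step*/) { return {1.0f, 1.0f}; }

// Mirrors the loop shape of SMAASearchDiag1 (assumed from the reference SMAA
// code): 'e' is an out parameter that the original only writes in the loop.
// max_steps_diag plays the role of the diagonal search steps setting.
inline float search_diag(int max_steps_diag, float2& e) {
    assert(max_steps_diag >= 0);
    e = {0.0f, 0.0f};  // the fix: 'e' has a value even if the loop never runs
    float z = -1.0f;   // step counter, starts at -1 like coord.z
    float w = 1.0f;    // "still on an edge" flag, like coord.w
    while (z < float(max_steps_diag - 1) && w > 0.9f) {
        z += 1.0f;
        e = sample_edges(z);
        w = 0.5f * e[0] + 0.5f * e[1];
    }
    return z;
}
```

With `max_steps_diag = 0` the condition `-1 < -1` is false on entry, which is exactly the "0 iteration(s)" case; without the initialization line, `e` would be left unwritten.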

- Insomnia

- MrAlucardDante

Is there any ETA for this, as I am still on 3.0.8?

Also, the releases page on GitHub only provides source code. It would be nice to add executables too, so we have a backup when this stuff happens. I don't have Visual Studio and don't want to download it just to build one app. github.com/crosire/reshade/releases

Thanks

- crosire

- Topic Author

- logicalnerd

- OtisInf

The problem is depth buffer detection. In the game 'Assassin's Creed Origins' (and also in earlier AnvilNEXT64 games, like Unity), the ReShade algorithm in void d3d11_runtime::detect_depth_source() sometimes picks a false positive. But it's very weird, see below.

In-game, I've enabled the DisplayDepth shader for testing (if you have the game: I'm at the start, in the Siwa village where the main character starts shooting the bow after a cutscene). When I rotate the camera horizontally, the depth buffer is visible at times, then suddenly disappears, and then reappears again. So I made a debug build of the latest ReShade code and placed a breakpoint in void d3d11_runtime::detect_depth_source(). When execution arrives there after the countdown, there are 2 entries in _depth_source_table. Here comes the weird part: when the depth buffer detection picks the right one (so it's visible, filled and usable by ReShade shaders), the first entry in _depth_source_table has 100K+ vertices and is the obvious choice. When I rotate the camera horizontally and the depth buffer is suddenly empty for ReShade shaders (a white screen), ReShade has picked the _second_ entry in _depth_source_table. Debugging shows, however, that both entries then have a very low vertex count (the first has just 1000 or so vertices, the second 17000; way too low for a high-poly game like this).

But when I force ReShade to always pick the first entry (I simply break out of the for loop at the end of the first iteration), it works OK. So my suspicion is that ReShade sometimes doesn't add the proper entry to _depth_source_table when it should. The hack I added to force it to use the first entry of course only 'works' in this case because it doesn't change its own depth buffer replacement.

My question is: do you have any hints on where I should look to investigate further and find the cause? I first thought the game might switch to a lower-resolution depth buffer (no) or use a deferred context. But from the looks of it, a deferred context would also be picked up by ReShade, and its draw calls should also be added properly to the runtimes. When (IF!) I find it, I'll of course create a proper PR for you to merge.
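To illustrate why the choice flips, here is a deliberately simplified selection rule (most vertices wins; this is not ReShade's actual formula) next to the "always take the first entry" hack, using numbers roughly like the ones quoted above. The struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, simplified stand-in for one entry of _depth_source_table.
struct DepthSourceInfo {
    std::uint32_t width = 0, height = 0;
    std::uint32_t drawcall_count = 0;
    std::uint32_t vertices_count = 0;
};

// Simplified heuristic: the entry with the most vertices wins.
// Returns the winning index, or -1 for an empty table.
inline int pick_by_vertices(const std::vector<DepthSourceInfo>& table) {
    int best = -1;
    for (std::size_t i = 0; i < table.size(); ++i) {
        if (best < 0 ||
            table[i].vertices_count > table[static_cast<std::size_t>(best)].vertices_count)
            best = static_cast<int>(i);
    }
    return best;
}

// The debugging hack: always stop at the first entry.
inline int pick_first(const std::vector<DepthSourceInfo>& table) {
    return table.empty() ? -1 : 0;
}
```

If the first entry's vertex count drops from 100K+ to about 1000 while the second stays at 17000, a count-based rule has to switch to the second entry, even though the first one is still the buffer that holds the scene.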

(edit) It does seem to switch to a lower-res depth buffer at times (one which has the majority of vertices/draw calls), namely half-res (e.g. 960x600 instead of 1920x1200). Creating a replacement for these sometimes fails, so it doesn't always work, but some resolutions do work (if I simply add all depth buffers used). Looking at the draw call logging function, the game does use multiple contexts for rendering.

So basically, it comes down to this: if ReShade picks the right depth stencil at a given point and you force it to keep using that one, it always works and depth information is always available. However, due to whatever issue is causing this, if you let it pick the depth stencil based on the formula currently in the code, it may pick the wrong one, and then the data is 1.0. So when you force it to always pick e.g. the first buffer, the data is always available, even though it reports just a couple of draw calls and 1000 or so vertices.
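Dropping half-resolution candidates by comparing against the output size (which OtisInf does inside detect_depth_source further down) could look like this. The struct is a hypothetical stand-in for a _depth_source_table entry, and exact equality is the simplest possible rule; whether it is too strict for games whose depth buffer is slightly larger than the back buffer is untested here.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a _depth_source_table entry.
struct DepthSourceInfo {
    std::uint32_t width, height, drawcall_count, vertices_count;
};

// Keep only candidates whose size equals the output (back buffer) size,
// e.g. dropping a 960x600 buffer when the output is 1920x1200.
inline std::vector<DepthSourceInfo> filter_to_output_size(
    const std::vector<DepthSourceInfo>& table,
    std::uint32_t out_width, std::uint32_t out_height) {
    assert(out_width > 0 && out_height > 0);
    std::vector<DepthSourceInfo> result;
    for (const auto& info : table)
        if (info.width == out_width && info.height == out_height)
            result.push_back(info);
    return result;
}
```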
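The "once the right one is picked, keep using it" behaviour could be sketched as a selector that honours a pinned buffer while it still shows up among the candidates and otherwise falls back to a heuristic (here: most draw calls). All names are hypothetical, and whether the pin comes from a user setting or from a first good pick is left open.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using DepthStencilId = std::uintptr_t;  // hypothetical handle; 0 means "none"

struct Candidate {
    DepthStencilId id;
    std::uint32_t drawcall_count;
};

class DepthSourceSelector {
public:
    void pin(DepthStencilId id) {
        assert(id != 0);  // 0 is reserved for "nothing pinned"
        pinned_ = id;
    }
    void unpin() { pinned_ = 0; }

    // Returns the pinned buffer if it is still a candidate; otherwise the
    // candidate with the most draw calls (0 if there are no candidates).
    DepthStencilId select(const std::vector<Candidate>& candidates) const {
        DepthStencilId best = 0;
        std::uint32_t best_count = 0;
        for (const auto& c : candidates) {
            if (pinned_ != 0 && c.id == pinned_)
                return c.id;  // pinned buffer still alive: keep using it
            if (best == 0 || c.drawcall_count > best_count) {
                best = c.id;
                best_count = c.drawcall_count;
            }
        }
        return best;
    }

private:
    DepthStencilId pinned_ = 0;
};
```

The fallback matters because a pinned buffer can be destroyed (for example on a resolution change); in that case the selector quietly returns to the heuristic instead of holding on to a dead handle.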

- JBeckman

Assassin's Creed Origins has a ton of compute shader effects, so I would guess the cause is very similar. Not too sure of a solution, though, short of manually overriding it or changing the hook, like how you put that breakpoint there.

I'll see if I can find that post again, but that's essentially what it came down to: one of the lighting effects in Nier: Automata was doing a lot of calls, and with how much of AC: Origins uses compute shaders, I would guess a very similar situation is causing this issue.

EDIT: For the half-res view, I'm wondering if that could be the depth of field effect; those usually run at half or even quarter res for performance reasons.

(Could also be ambient occlusion or some shadow effect though.)

EDIT: In general it couldn't hurt to have some more options for controlling some of these behaviors while keeping the generic usage of ReShade but allowing advanced users to configure things a bit for games that are problematic.

But I wouldn't really know what the best way to go about this would be or what sort of settings could be implemented.

- OtisInf

Then in the detect_depth_source function I simply filter out the ones with a resolution other than the output buffer's, so I can check what the numbers in the buffers are. Note that in the list below the 'right' buffer is the second one, not the first. This is of course random, so there's no easy fix for this.

When the right buffer is chosen, and the depth buffer info is available to reshade shaders:

vertices: 62928

drawcalls: 103

[0x0000000199a1e008] width=1280 height=720 drawcall_count=64 vertices_count=9486

[0x0000000190f1e4c8] width=1280 height=720 drawcall_count=37 vertices_count=61726 << CHOSEN

[0x0000000199a19c88] width=640 height=360 drawcall_count=98 vertices_count=96993832

[0x0000000e28c06b48] width=640 height=360 drawcall_count=29 vertices_count=19760

When the wrong buffer is chosen (I simply rotate the camera about 90 degrees to the right), the depth buffer info is empty (1.0):

vertices: 70998

drawcalls: 97

[0x0000000199a1e008] width=1280 height=720 drawcall_count=73 vertices_count=9672 << CHOSEN

[0x0000000190f1e4c8] width=1280 height=720 drawcall_count=43 vertices_count=1054

[0x0000000199a19c88] width=640 height=360 drawcall_count=92 vertices_count=105347878

[0x0000000e28c06b48] width=640 height=360 drawcall_count=28 vertices_count=24224

- Where did the vertices of the second buffer suddenly go? Here it simply picks the wrong one.

- Why are the global vertices/drawcalls variables wrong? Those are the absolute numbers, but they don't add up with respect to the buffers in the table.
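The mismatch is easy to check with the numbers from the two dumps: 64 + 37 + 98 + 29 = 228 per-buffer draw calls against a frame total of 103, and 73 + 43 + 92 + 28 = 236 against 97. Per-buffer shares of one frame's draw calls can never sum to more than the frame total, so the drawcall_count fields evidently hold something other than per-frame counts. A small check (function names hypothetical):

```cpp
#include <cassert>
#include <cstdint>
#include <numeric>
#include <vector>

// Sum of the per-buffer drawcall_count fields.
inline std::uint64_t sum_drawcalls(const std::vector<std::uint32_t>& per_buffer) {
    return std::accumulate(per_buffer.begin(), per_buffer.end(), std::uint64_t{0});
}

// If every field counted only that buffer's draws in this frame,
// the sum could not exceed the frame-wide total.
inline bool per_buffer_counts_consistent(const std::vector<std::uint32_t>& per_buffer,
                                         std::uint32_t frame_total) {
    return sum_drawcalls(per_buffer) <= frame_total;
}
```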

In AC3 they rendered some elements off-screen into a texture and then blended them on top of the scene (e.g. when they knew there was nothing in front of those elements), so these weren't in the depth buffer. However, if I force ReShade to pick the 'right' buffer, the data is always complete, so that's not it.

Crosire, have you seen something like this, and do you perhaps know where to look?

- crosire

- Topic Author

I'd suggest you attach RenderDoc ( renderdoc.org/ ) to the game and capture two traces, one where ReShade would choose the second buffer and one where it would choose the first buffer and then compare the render targets and draw calls in RenderDoc to figure out where they differ.

- OtisInf

Ah didn't know that tool existed, sounds indeed like a good point to start with! I'll get back to you on this. (will be next week tho)

- aeterna426

PS. If more people have the same problem, wouldn't it be easier to place a link instead of a button? It's just a thought.

Edit: Oh... sorry, sorry... I see this magic button now on the main page (where are my eyes?).

Edit 2: Where are my eyes (again!)? Sorry, sorry... Of course the interface is still available, just in a different way (Shift + F2 while in the game). Now I'm completely satisfied and want to say: a big THANK YOU!!! ...and sorry for the mess I made here.

- OtisInf

crosire wrote: Roughly 20,000 triangles on the chosen buffer is a rather low amount of data in the scene, which is suspicious. Especially since the 3rd one has a much higher polygon count. Almost looks like the 3rd one is used during rendering, upscaled into the second one and then some additional stuff depending on view is drawn. What data does the 3rd buffer contain?

I'd suggest you attach RenderDoc ( renderdoc.org/ ) to the game and capture two traces, one where ReShade would choose the second buffer and one where it would choose the first buffer and then compare the render targets and draw calls in RenderDoc to figure out where they differ.

For the life of me, I can't get this to work. I disabled the Uplay overlay, ran everything as admin, disabled the firewall and tried multiple games, but I can't get RenderDoc to capture anything. It launches the game (I also used 'hook into children', as that applies here), but it never receives anything, and the overlay it should show is never rendered. It also doesn't display the list of processes it's attached to after launch. I suspect the game detects the tool and disables it, but I have no evidence to back that up, only that it doesn't work. Perhaps I'm doing something wrong, but after reading the docs I don't know what that would be...

- crosire

- Topic Author

OtisInf wrote: I suspect the game detects the tool and disables it but I have no evidence to back that up, only that it doesn't work.

That may very well be true. I know a few applications that prevent the use of both debuggers and graphics debuggers. Back in the days of PIX there was even a built-in API call that would disable PIX for the application calling it (ReShade prevents that when built in debug mode, by the way). So it wouldn't surprise me if RenderDoc either has something similar or is simply blocked directly.

- OtisInf

It would be a very easy way to rip models, textures and shaders out of the game indeed. I'd block it if I were a game dev.

On to the matter at hand, though. I put breakpoints in the draw methods that don't have vertex count logging, and the game does call DrawIndexedInstancedIndirect and DrawInstancedIndirect. It doesn't use DrawAuto. The indirect calls might be why we don't see the right numbers, as the primitives drawn by these methods are likely generated by the game's compute shaders. I'll try to grab the buffer description, and from there the number of vertices in the buffer, and add it to the draw call logging so these vertices are tracked as well.

(edit) After adding buffer desc retrieval to both methods and doing a ballpark calculation of the number of vertices (buffer length / 4), it does seem to fix it for the most part, but it's of course terribly slow, as it retrieves the buffer desc every time. 'For the most part' means there are still occasions where it fails and ReShade picks the wrong buffer. It might only work now because it's so slow (< 10 fps at 1280x720 with a 1070).

(edit) Hmm, this isn't a good path: the buffer is 6062080 bytes, meaning more than 1.5M vertices if it's full, but that remains to be seen; there's no indicator of how much data is actually inside the buffer...

The stride is 0, so there's no way to know how big the data elements are. There might very well be a length value at the start, but perhaps it's at a different offset, who knows...

(edit) Hmm, even just calling on_draw_call with the context and a constant (e.g. 16) is slow. That's likely due to the mutex, as the multithreaded code becomes single-threaded in that method, or due to requesting the depth-stencil buffer... very strange. In any case, I think this is why we miss a lot of calls/vertices and therefore why the buffer selection is off. Crosire, do you have ideas on how to fix this or how to proceed? E.g. tweak the current algorithm with the numbers that are tracked, or some other way, e.g. obtaining the extra vertex info from the buffers somehow?

(edit) I think I see the bug, bear with me here

- In on_draw_call, you increase _drawcalls by 1 (it represents the total number of draw calls in the frame, for those reading along) and also store this value in the current depth-stencil struct.

- In detect_depth_source there's a delay, so the depth source is only detected every 30 frames; otherwise it simply keeps what we have. Say we're at the 31st frame and will detect the depth source.

- You now do some calculations to decide which depth-stencil buffer to pick. You compare the draw call count stored in the current depth-stencil struct with... the global _drawcalls value. But that's the same value you stored in the last call...

I think your intention is to determine the fraction of the total draw calls in _drawcalls taken by the depth-stencil struct you're looking at in detect_depth_source, but the way it's calculated at the moment is IMHO not going to work. What should be done is: increase drawcall_count by 1 in the depth-stencil struct in on_draw_call, and clear all structs in detect_depth_source, even when the delay is active. Then you can divide by _drawcalls properly and determine the percentage of draw calls for that buffer in the current frame. I'll test this to see if it fixes it.

(edit) Well, it at least makes the numbers match, but they're now so low it makes little sense (7 draw calls, 34 vertices, wtf...).
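To make the difference concrete, here are both counting schemes as described in this post (modeled on the description, not checked against ReShade's source; the types and the uintptr_t handle are hypothetical). With the snapshot scheme, whichever buffer was drawn to last holds the highest value; with per-frame counting, the values become real shares of the frame.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>

using DepthStencilId = std::uintptr_t;  // hypothetical depth-stencil handle

// As described above: each buffer stores a snapshot of the running frame
// counter at its last draw, so the value reflects draw order, not a share.
struct SnapshotTracker {
    std::uint32_t drawcalls = 0;
    std::unordered_map<DepthStencilId, std::uint32_t> per_buffer;
    void on_draw_call(DepthStencilId ds) { per_buffer[ds] = ++drawcalls; }
};

// Proposed fix: count draws per buffer and reset every frame, whether or not
// detection runs in that frame.
struct PerFrameTracker {
    std::uint32_t drawcalls = 0;
    std::unordered_map<DepthStencilId, std::uint32_t> per_buffer;

    void on_draw_call(DepthStencilId ds) {
        ++drawcalls;
        ++per_buffer[ds];
    }
    // Fraction of this frame's draw calls that went to 'ds'.
    double share(DepthStencilId ds) const {
        auto it = per_buffer.find(ds);
        if (it == per_buffer.end() || drawcalls == 0) return 0.0;
        return static_cast<double>(it->second) / drawcalls;
    }
    void end_frame() {
        drawcalls = 0;
        per_buffer.clear();
    }
};
```

For 9 draws on one buffer followed by 1 draw on another, the snapshot scheme stores 9 and 10 (the second buffer looks dominant), while per-frame counting gives 9 and 1.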

(edit) DispatchIndirect is also part of this set of methods. You obviously already know this, but for completeness: these methods pass the data along directly on the GPU instead of requiring the CPU to call their non-indirect variants, with the requirement that the method's arguments are stored in the buffer passed as argument, at the given aligned byte offset. So DrawIndexedInstancedIndirect has its arguments, which are the same as DrawIndexedInstanced's, in the buffer passed to DrawIndexedInstancedIndirect. Now, it's theoretically possible to obtain this data: wrap the created buffers with a custom class like with the device and context, grab the GUIDs used to set the private data, then use that info to call GetPrivateData to obtain the buffer pointer and read the arguments, which can then be used to calculate the vertex count for the depth-stencil structs. But I fear that read is going to be a serious performance problem, as it will create a stall (the CPU reading from a GPU buffer), and this is high-performance code.

So in other words: doing this through code is likely going to be problematic. It's far easier to simply let the user pick the right buffer and stick with it, as it's likely going to be the same one throughout the game.
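For reference, this is roughly what reading the arguments would involve once the bytes are on the CPU. The layout follows DrawIndexedInstanced's parameter order (five 32-bit values at the aligned byte offset passed with the buffer); the struct and function names are hypothetical. In D3D11, getting those bytes means copying the argument buffer into a D3D11_USAGE_STAGING buffer and mapping it for reading, which waits for the GPU: the stall mentioned above. Note also that this yields index counts, not unique vertices.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Same order as DrawIndexedInstanced's parameters.
struct IndexedIndirectArgs {
    std::uint32_t index_count_per_instance;
    std::uint32_t instance_count;
    std::uint32_t start_index_location;
    std::int32_t base_vertex_location;
    std::uint32_t start_instance_location;
};
static_assert(sizeof(IndexedIndirectArgs) == 20, "five tightly packed 32-bit values");

// 'bytes' stands for a CPU-side copy of the argument buffer.
// Returns false if the offset is misaligned or out of range.
inline bool read_indexed_args(const std::vector<std::uint8_t>& bytes,
                              std::size_t aligned_byte_offset,
                              IndexedIndirectArgs& out) {
    if (aligned_byte_offset % 4 != 0 ||
        aligned_byte_offset + sizeof(IndexedIndirectArgs) > bytes.size())
        return false;
    std::memcpy(&out, bytes.data() + aligned_byte_offset, sizeof(out));
    return true;
}

// Indices fetched by the draw across all instances.
inline std::uint64_t indices_drawn(const IndexedIndirectArgs& a) {
    return std::uint64_t{a.index_count_per_instance} * a.instance_count;
}
```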

- curryman

- NayaLotus

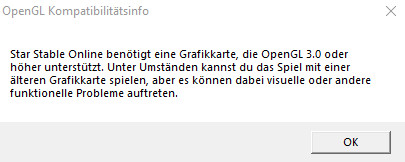

I have a question: the game I want to use ReShade with (Star Stable Online) switched to OpenGL a year ago. The problem is that now, when I have ReShade installed, this pops up:

English translation:

"Star Stable Online needs a graphics card that supports OpenGL 3.0 or higher. You may be able to play with an older graphics card, but it can cause visual or other functional problems."

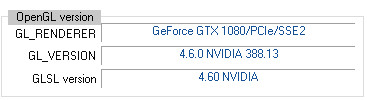

My graphics card supports the newest OpenGL version.

So it seems like ReShade drags the version down to something below 3.0. Is it possible to make ReShade compatible with a higher OpenGL version?

I can't really use ReShade with Star Stable like this, it's bugging like hell ;u;

- crosire

- Topic Author

- OtisInf

IMHO it's not that hard to fix, as D3D11 is already designed to be used multi-threaded; the code just has to be moved around a bit. Things that are done on a context can stay there until they are either pulled from the instance acting as the immediate context, or FinishCommandList is called. I made a proof of concept for this which still uses the single-threaded bottleneck in on_draw_call and is therefore dog slow, but it now has the proper numbers (13K draw calls on one depth stencil, 200K+ vertices, the others almost none) in my test game, AC: Origins.

My idea is this:

in Device

- Track each created depth stencil buffer together with its size.

in Context

- Track each depth stencil bind change, so the active depth stencil (or none) is known. Store this inside the context object.

- Each set depth stencil is tracked inside the context, not the runtime. This makes the tracking lock-free.

- On a draw call on a context, if a depth stencil is active, add 1 to the number of draw calls for that depth stencil in the context, and optionally add the number of vertices.

- When FinishCommandList is called, the depth stencil counter map is stored in the runtimes under the command list pointer, so it can be merged every time the command list is executed.

- On ExecuteCommandList, the depth stencil counter map for that command list pointer is pulled from the runtimes and merged into the executing context's map, as if the commands had been called on that context. In the end, all info for work that effectively runs on the immediate context ends up inside the immediate context. As each context is single-threaded, no lock is needed anywhere.

- The immediate context now has all the numbers. Just pull the depth stencil counter map from it and:

- Per entry in the map, pull the desc from the device tracker, which stored size info per created depth stencil. Although this info lives in the device, this is single-threaded code: we're in Present, on the immediate context, which can't run on multiple threads at once, so a lock could be avoided here too.

- If the desc has a resolution that doesn't match our own, ignore it; if it matches, check the draw call count and pick the one with the highest count.
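A minimal single-process sketch of this proposal in portable C++, with hypothetical types standing in for the D3D11 wrappers (ContextTracker, CommandList and friends are not ReShade names). One deliberate deviation: instead of a runtime-wide map keyed by the command-list pointer, which FinishCommandList calls on several threads would write concurrently (and which would therefore still need a lock), the counters travel inside the command-list object itself. The device-side size map is taken as given; since resources can be created on any thread, it may still need its own lock. Resetting the bound depth stencil when state is not restored follows the documented D3D11 behaviour that the context then returns to its default state.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>
#include <utility>

using DepthStencilId = std::uintptr_t;  // hypothetical handle; 0 = none bound

struct Counters {
    std::uint32_t drawcalls = 0;
    std::uint64_t vertices = 0;
};
using CounterMap = std::unordered_map<DepthStencilId, Counters>;

// Hypothetical recorded command list that carries the counters of its draws.
struct CommandList {
    CounterMap counters;
};

// One tracker per (immediate or deferred) context. Only the thread that owns
// the context touches it, so it needs no mutex.
struct ContextTracker {
    DepthStencilId bound = 0;
    CounterMap counters;

    void set_depth_stencil(DepthStencilId ds) { bound = ds; }

    void on_draw(std::uint32_t vertices) {
        if (bound == 0) return;  // nothing bound: nothing to attribute
        Counters& c = counters[bound];
        ++c.drawcalls;
        c.vertices += vertices;
    }

    // FinishCommandList: move the counters into the command list.
    CommandList finish_command_list(bool restore_state) {
        CommandList list{std::move(counters)};
        counters.clear();
        if (!restore_state) bound = 0;
        return list;
    }

    // ExecuteCommandList: merge as if the commands had run on this context.
    void execute_command_list(const CommandList& list, bool restore_state) {
        for (const auto& kv : list.counters) {
            Counters& dst = counters[kv.first];
            dst.drawcalls += kv.second.drawcalls;
            dst.vertices += kv.second.vertices;
        }
        if (!restore_state) bound = 0;
    }

    void reset_frame() { counters.clear(); }
};

struct DepthStencilDesc {
    std::uint32_t width;
    std::uint32_t height;
};

// At Present, on the immediate context: among buffers matching the output
// size, pick the one with the most draw calls (0 if none qualifies).
inline DepthStencilId pick_depth_source(
    const CounterMap& counters,
    const std::unordered_map<DepthStencilId, DepthStencilDesc>& descs,
    std::uint32_t out_width, std::uint32_t out_height) {
    DepthStencilId best = 0;
    std::uint32_t best_calls = 0;
    for (const auto& kv : counters) {
        auto it = descs.find(kv.first);
        if (it == descs.end() || it->second.width != out_width ||
            it->second.height != out_height)
            continue;
        if (kv.second.drawcalls > best_calls) {
            best = kv.first;
            best_calls = kv.second.drawcalls;
        }
    }
    return best;
}
```

In a typical frame, draws recorded on a deferred context end up attributed on the immediate context once the command list is executed, and the Present-time pick then ignores any buffer whose size doesn't match the output.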

thoughts?

- Martigen

It has a weird effect: the depth buffer is all white or all black depending on whether you set REVERSED or not, but if you enable MXAO all the same, you get a bar down one side shaded by MXAO and a square outline just near it. It almost looks like some kind of broken HUD overlay. I wonder if the wrong buffer is being grabbed here too, and maybe your test code could be effective there as well.
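The all-white/all-black flip is what you would expect from a buffer holding one constant value, such as the 1.0 seen earlier in this thread. A sketch of the usual linearization, assumed to match the one in ReShade.fxh (flip if reversed, then divide by far - depth * (far - near) with near fixed at 1; the default far plane of 1000 is also an assumption):

```cpp
#include <cassert>

// Assumed to mirror ReShade.fxh's depth linearization.
inline float linearize_depth(float raw, bool reversed, float far_plane = 1000.0f) {
    assert(raw >= 0.0f && raw <= 1.0f);
    if (reversed) raw = 1.0f - raw;  // reversed-Z: near = 1, far = 0
    const float near_plane = 1.0f;
    return raw / (far_plane - raw * (far_plane - near_plane));
}
```

A raw value of 1.0 linearizes to 1.0 (white) without REVERSED and to 0.0 (black) with it, so a uniformly filled or empty buffer shows exactly the symptom described, regardless of which setting is used.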